About Me

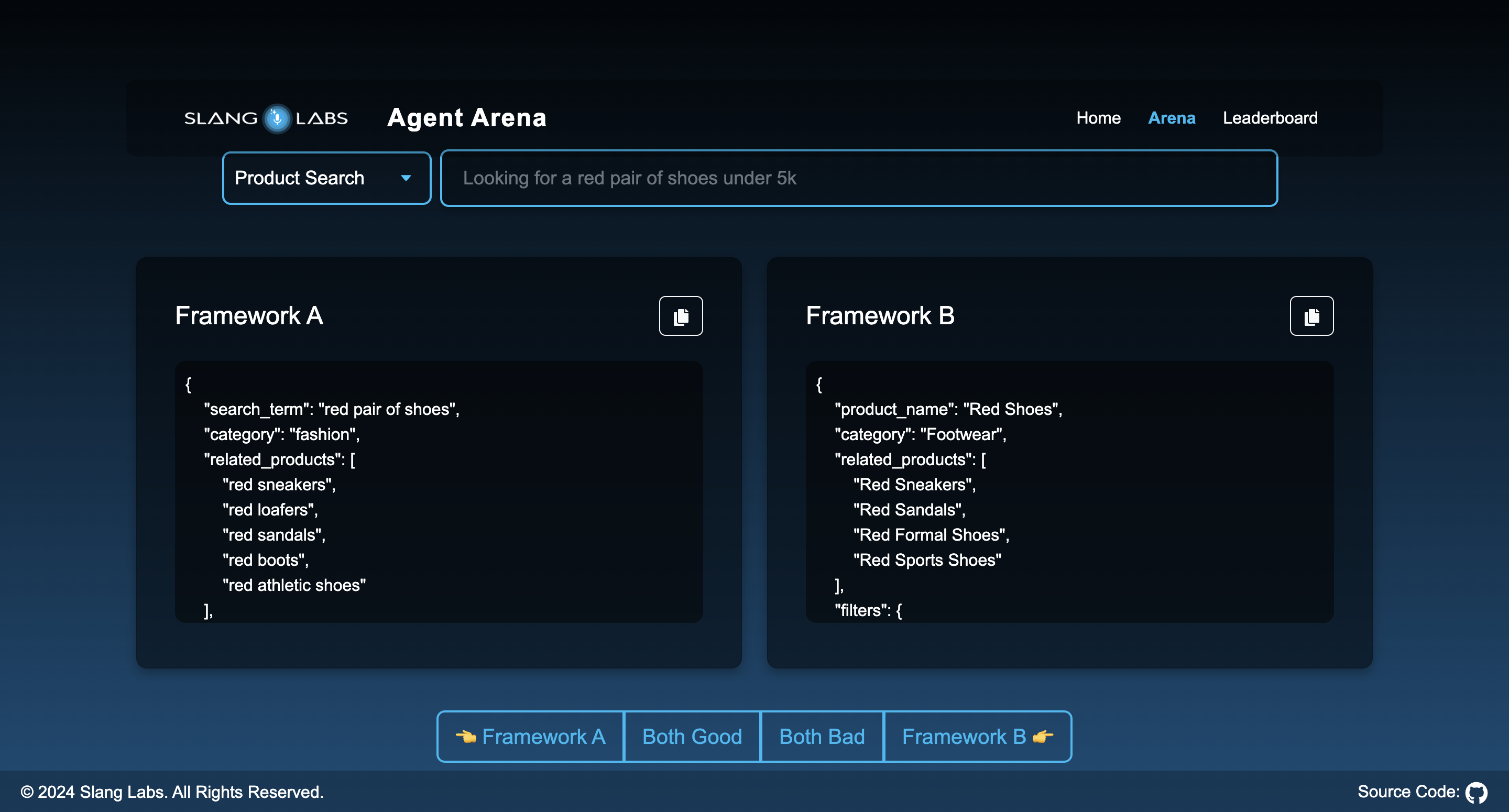

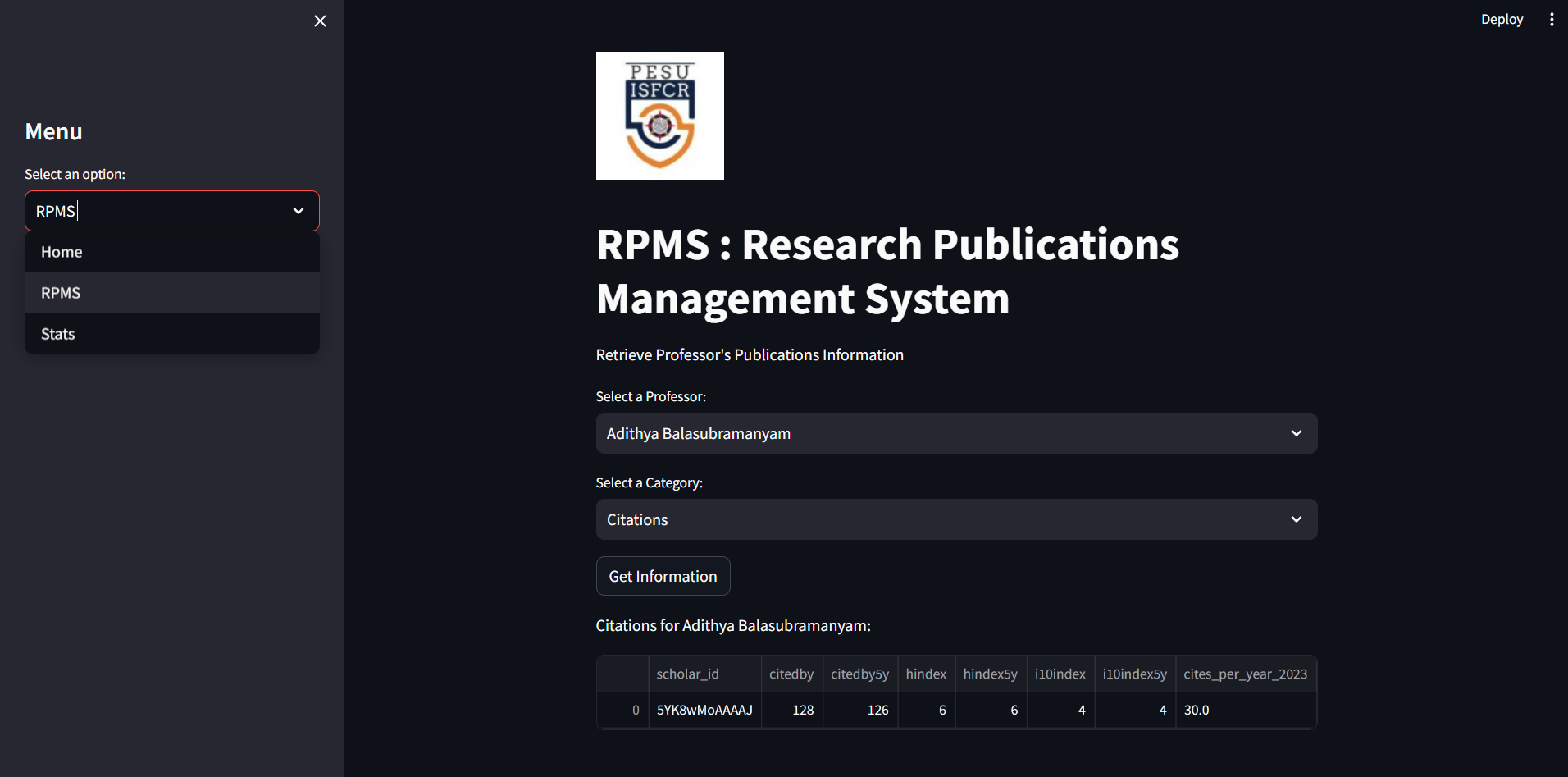

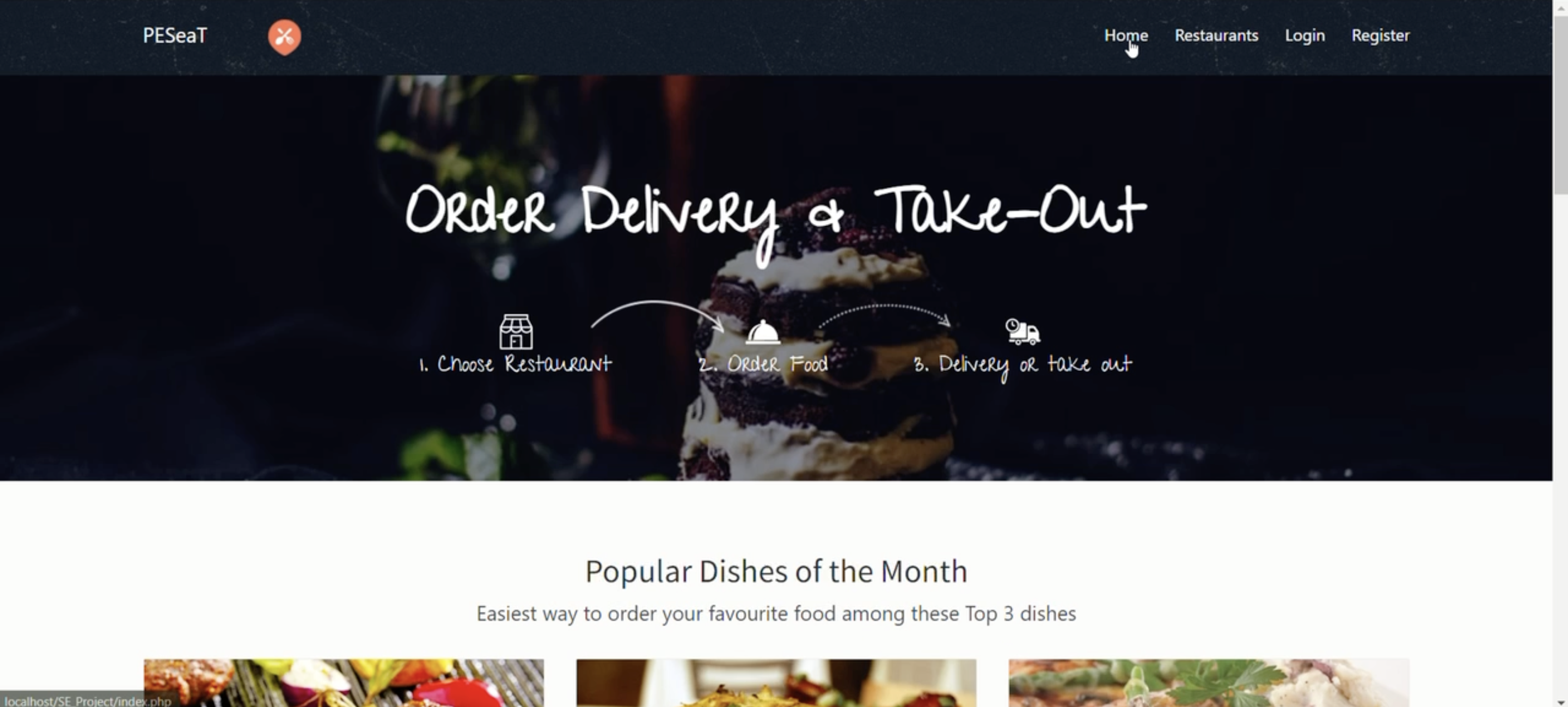

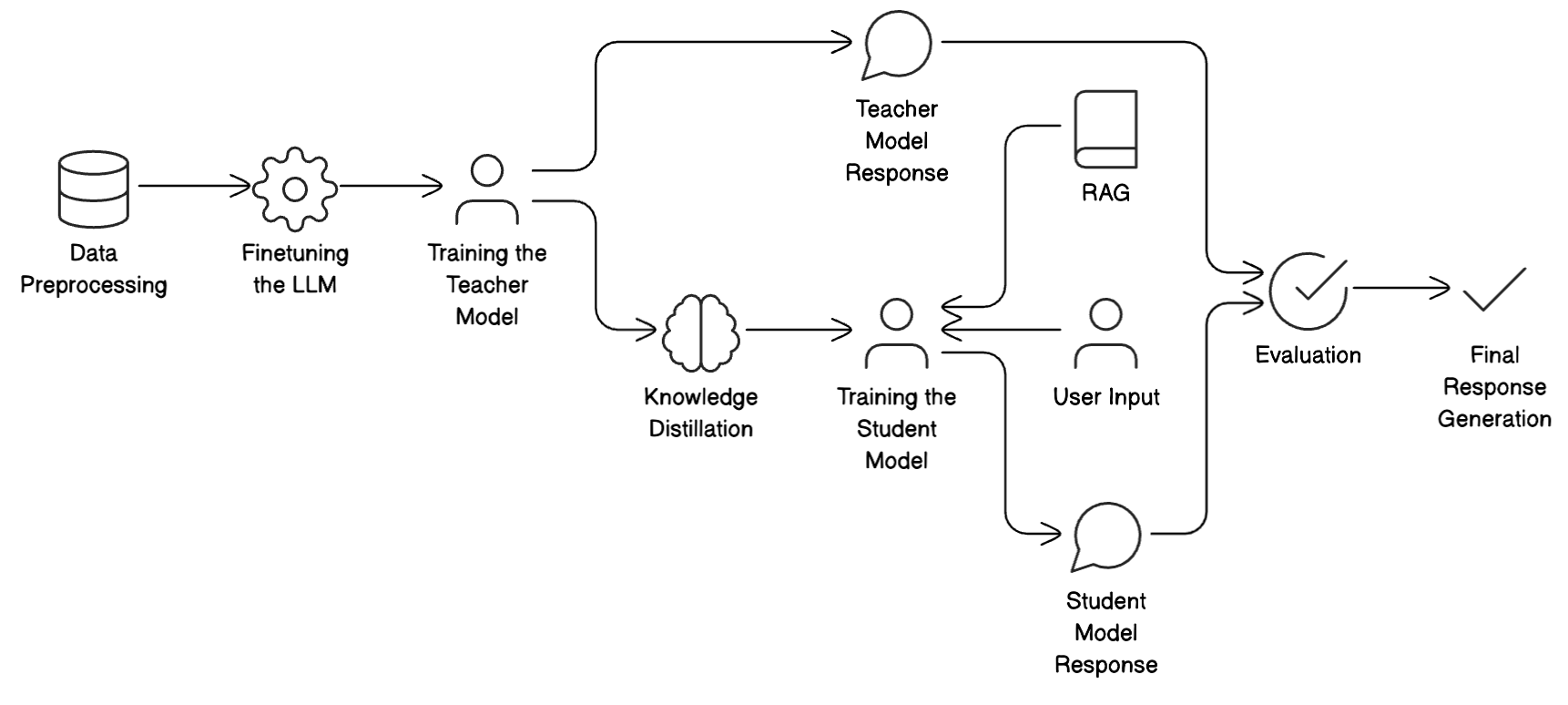

I’m a B.Tech Computer Science student specializing in Machine Intelligence & Data Science at PES University, with practical experience across several domains. I work mainly with Python, Machine Learning, and the MERN Stack, and I gained hands-on generative AI and ML experience during my internship at Slang Labs, where I contributed to conversational AI solutions. I also built front-end skills during a summer stint at Exam Trakker, where I developed dynamic web interfaces using Angular and Nx.

I thrive in leadership roles, whether that means heading The Alcoding Club and running high-energy hackathons, or co-leading Shunya, where I helped organize ideathons and hackathons for over 300 participants. My work as a web developer for AIKYA and my role managing operations for the Apple Developer's Group reflect a blend of technical and organizational skills.

On the side, I’m a polyglot, fluent in English, Telugu, Kannada, and Hindi, with a touch of Spanish. Outside the tech world, I’m a professional inline skater and runner, and I enjoy exploring diverse cuisines, photography, and music.

View Resume